Final Major Project - Creating a Responsive Music System for Gaming

- Niamh McCarney

- Nov 23, 2020


Hey! Thanks for stopping by! My name is Niamh (pronounced 'N-eev', though, as anyone with Irish family will know, not spelt like it) and I'm currently creating a demo of a responsive music system for games as part of the final year of my degree.

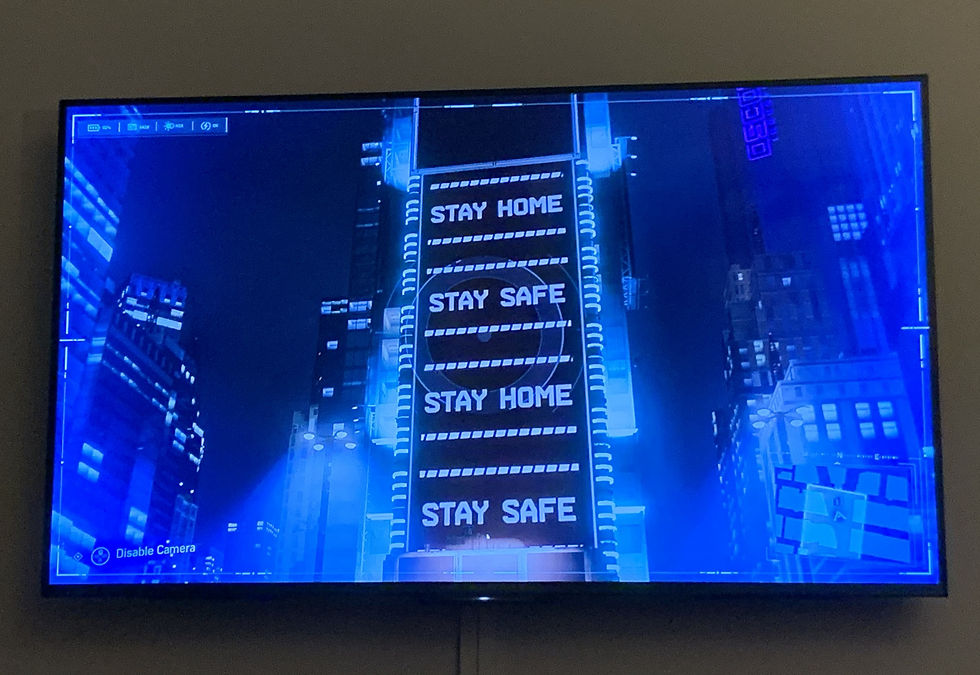

The main inspiration behind this project comes from the, quite frankly excessive, amount of Spider-Man PS4 I played while I was furloughed. One of the things that really stood out to me in that game was how much the music affected the user experience. This sounds like a really obvious point to make, but it wasn't just about the musical content in the game (as wonderful as it is); what really struck me was how the music was being used. As we all know, Marvel movies are now a huge market, grossing millions of dollars on opening weekends, and Spider-Man is no exception! Together with the game's amazing artwork and graphics, the way the music works in Spider-Man PS4 makes players feel not only like they are the hero but also like they are the star of their own movie. They control the scenes, they control the fight choreography and, importantly, they control the music (which is obviously the most important part of any film).

So, why is this different to any other superhero game with a rousing brass section and lush string orchestration? Well, in my opinion it's because the music responds, at least to some extent, dynamically to the actions happening on screen. I tweeted about this recently and got a response from Lewis Barn (@lewisbarn), who had actually interviewed Rob Goodson, the music editor for Spider-Man PS4 (you can read the article for yourself here: https://medium.com/storiusmag/spider-mans-dynamic-music-14d776f5a43b). Goodson explained that the music system was designed to respond to a number of variables in the game and that the music would change in accordance with those variables. In other words, just as a film composer considers what is happening on screen when writing music for a particular scene, the music system takes key information from the game and crafts its musical response accordingly. The end result is that playing Spider-Man feels very cinematic, because the music seems to react to your specific situation rather than just playing a generic loop whenever you're in a fight or swinging from one place to another.

So this was my challenge: to create a game music system, and music assets, that could respond to some of the specifics of the situation a player finds themselves in.

I'm currently in the 'planning phase' of this project, which means deciding on a musical style, working out which variable will control which musical feature, and getting a rough idea of the tools I'll be using.

My initial plan is to use Wwise and Unity for implementation and to create all the audio assets in Reaper. The Unity project would basically be a simple slider interface, used purely for demonstration purposes. I thought this would be the best approach because it is relatively simple to set up, while the scripts behind it could be reused in different contexts with different variables.

As part of the planning process, I ran my ideas past a few people in the industry, including Kat Welsford from Square Enix (@popelady), Bogdan Vera from Media Molecule (@BogdanVera) and Fred Horgan from Bossa Studios (@FredHorgan). From my initial conversations with them, it looks like the best approach would be to start simple: focus on a few common parameters, like position and velocity, that could be connected to RTPC (Real-Time Parameter Control) curves in Wwise, and then look at ways the system could branch out later on to use things like telemetry and prediction. This way I could demonstrate how the basics of the system could be reapplied to other games, and not just used for games where you're a quick-witted, web-slinging guy in lycra.
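To make the RTPC idea a bit more concrete, here is a rough Python sketch of what an RTPC curve does conceptually: it maps a game parameter (here, a hypothetical player speed) to a musical property (here, the volume of a hypothetical percussion stem) by interpolating between control points. This isn't Wwise code. In Wwise the curve is drawn in the authoring tool, the game just sends the parameter value, and there are curve shapes besides the straight lines used here. The names `SPEED_TO_PERCUSSION_DB` and `evaluate_curve` and all the numbers are made up for illustration.

```python
from bisect import bisect_right

# Hypothetical curve: (player speed, percussion stem volume in dB) control points.
# In Wwise these points would be drawn in the RTPC graph; this only models the
# idea using straight-line interpolation between neighbouring points.
SPEED_TO_PERCUSSION_DB = [(0.0, -96.0), (2.0, -24.0), (6.0, -6.0), (10.0, 0.0)]


def evaluate_curve(points, x):
    """Linearly interpolate a piecewise-linear curve, clamping outside its range."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)  # index of the first control point to the right of x
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (x - x0) / (x1 - x0)  # how far x is between the two points (0.0 to 1.0)
    return y0 + t * (y1 - y0)


for speed in (0.0, 1.0, 4.0, 12.0):
    print(speed, evaluate_curve(SPEED_TO_PERCUSSION_DB, speed))
# 0.0 -96.0   (standing still: percussion effectively silent)
# 1.0 -60.0
# 4.0 -15.0
# 12.0 0.0    (beyond the last point the curve is clamped at full volume)
```

The same function could drive any other property (a filter cutoff, a send level) just by swapping in a different list of points, which is the kind of reusability mentioned above.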

In addition to the RTPC curves, I'd also like to make use of game states in Wwise, so that what the player is doing (for example, fighting or swinging from one place to another) is the main factor setting the musical tone, while how the player is doing it only adds a slightly more 'bespoke' touch.
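Here is a minimal Python sketch of that split between states and continuous parameters, assuming three hypothetical states and made-up stem names. The state picks which set of stems plays (the tone), and a 0 to 1 intensity value, which in practice could come from an RTPC, only decides how many of that state's layers are switched on. In Wwise itself the state side would be handled by a State Group and the music hierarchy; the code below only models the logic, and `MusicState`, `STATE_STEMS` and `active_stems` are illustrative names, not Wwise API.

```python
from enum import Enum


class MusicState(Enum):
    """Hypothetical game states; in Wwise these would live in a State Group."""
    TRAVERSAL = "traversal"
    COMBAT = "combat"
    STEALTH = "stealth"


# Hypothetical stem sets. The state decides WHAT plays, i.e. the overall tone.
STATE_STEMS = {
    MusicState.TRAVERSAL: ["strings_pad", "light_perc", "melody"],
    MusicState.COMBAT: ["low_brass", "taiko", "strings_ostinato", "brass_melody"],
    MusicState.STEALTH: ["drone", "pizz_strings", "ticking_perc"],
}


def active_stems(state, intensity):
    """Return the stems to play for a state.

    intensity (0.0 to 1.0, e.g. fed by an RTPC) only decides HOW MUCH of the
    state's music is heard, by choosing how many layers are switched on. The
    first stem is always on, so each state keeps its basic character.
    """
    stems = STATE_STEMS[state]
    intensity = min(max(intensity, 0.0), 1.0)  # clamp out-of-range values
    count = 1 + round(intensity * (len(stems) - 1))
    return stems[:count]


print(active_stems(MusicState.COMBAT, 0.0))     # ['low_brass']
print(active_stems(MusicState.TRAVERSAL, 0.5))  # ['strings_pad', 'light_perc']
```

Keeping the state as the only thing that can change the stem set means a continuous value can never accidentally turn fight music into travel music; it can only make the current music fuller or sparser.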

In terms of the musical composition, vertical mixing (layering stems that play in sync and bringing individual layers in and out) seems to be the way to go, with lots of stems that could gradually fade in and out depending on the properties of in-game objects. One of the problems with this kind of system, however, is making the music respond to sharp transitions without sounding too jarring, so this is something I'm going to need to take into account during both the creation and implementation of the music.
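One common way to soften sharp changes is to limit how fast each stem's volume can move, so a sudden jump in a game value turns into a short fade instead of a hard cut. The Python sketch below models vertical mixing that way: every stem is assumed to play in sync, only its gain changes, and each update moves the gain toward its target by at most a fixed amount. The class names and the 0.5-per-second rate are hypothetical; Wwise has its own smoothing options for game parameters, which would be the first place to handle this in the real project.

```python
from dataclasses import dataclass, field


@dataclass
class Stem:
    """One vertical layer; gains are linear amplitude (0.0 = silent, 1.0 = full)."""
    name: str
    gain: float = 0.0
    target: float = 0.0


@dataclass
class VerticalMix:
    """Hypothetical vertical-mixing helper: all stems play in sync, only gains change."""
    stems: dict = field(default_factory=dict)
    max_change_per_sec: float = 0.5  # assumed rate: a full fade takes two seconds

    def add_stem(self, name):
        self.stems[name] = Stem(name)

    def set_target(self, name, target):
        # Clamp so an unexpected game value can never push a stem past full volume.
        self.stems[name].target = min(max(target, 0.0), 1.0)

    def update(self, dt):
        """Move each gain toward its target by at most max_change_per_sec * dt."""
        step = self.max_change_per_sec * dt
        for stem in self.stems.values():
            diff = stem.target - stem.gain
            if abs(diff) <= step:
                stem.gain = stem.target  # close enough: land exactly on the target
            else:
                stem.gain += step if diff > 0 else -step


mix = VerticalMix()
mix.add_stem("drums")
mix.set_target("drums", 1.0)  # e.g. a fight suddenly starts
for _ in range(10):           # ten 100 ms updates = one second
    mix.update(0.1)
print(mix.stems["drums"].gain)  # roughly 0.5: halfway through a two-second fade
```

Fading linear gain is the simplest option to show; fading in decibels usually sounds more even to the ear, so that would be worth comparing when it comes to implementation.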

My next steps for the project are to come up with a clearer vision of the final product, decide which game states I'd like to write music for, and choose which variables should drive the music and what effect they'll have, so I can bear this in mind while I'm creating the assets. Once all that's done, it's onto the creation!

I'll update this blog as I go and share any progress I make, but if you want to ask any questions about what I'm doing or want to get in touch, feel free to tweet at me (@rhymeswithleave) or drop me an email at niamh@nmaudio.co.uk.

A massive thanks again to Lewis, Kat, Bogdan and Fred for all their help! I really appreciate it! You can follow them on Twitter at @lewisbarn, @popelady, @BogdanVera and @FredHorgan respectively (and you definitely should!).

See you in the next update!

Niamh :)
